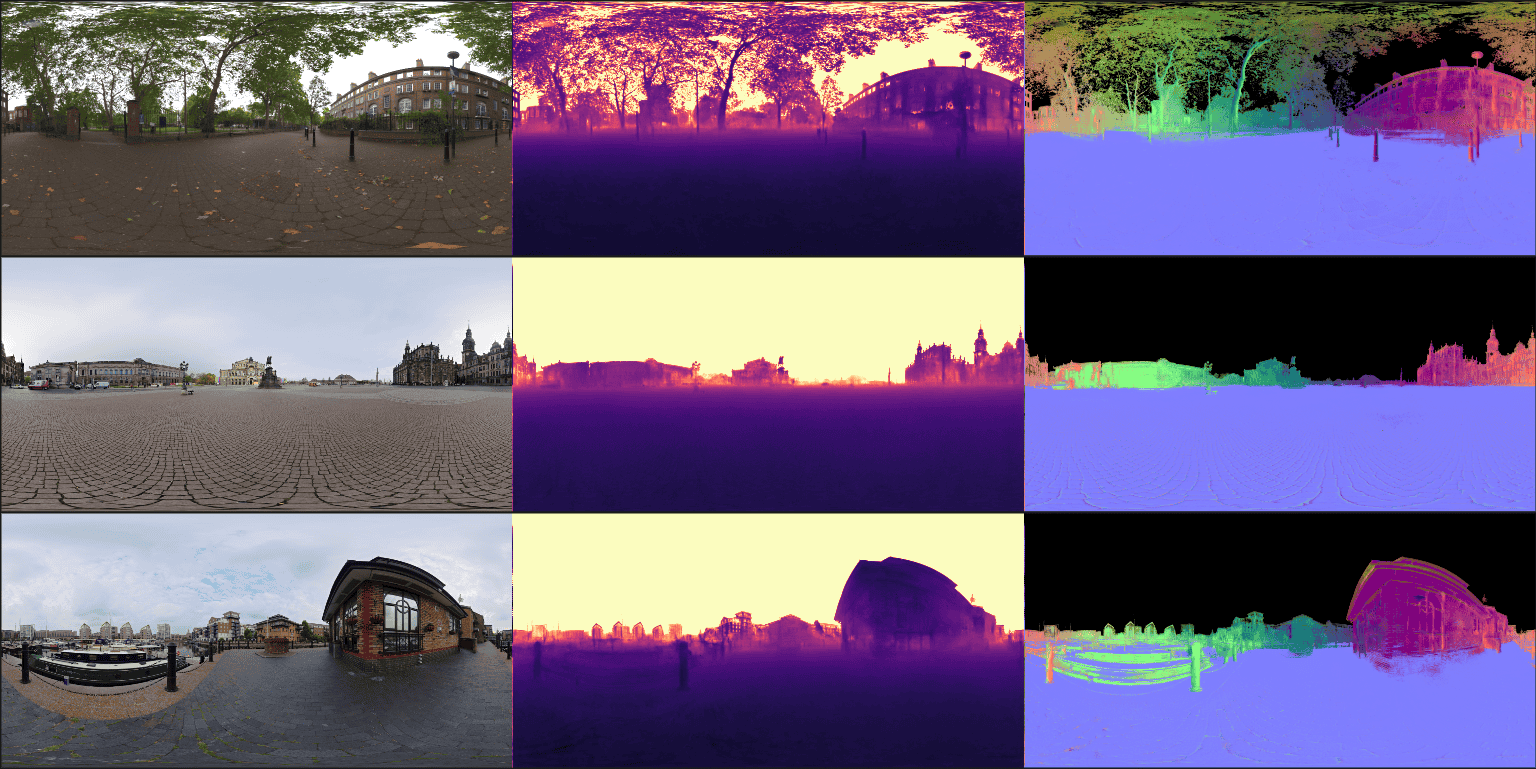

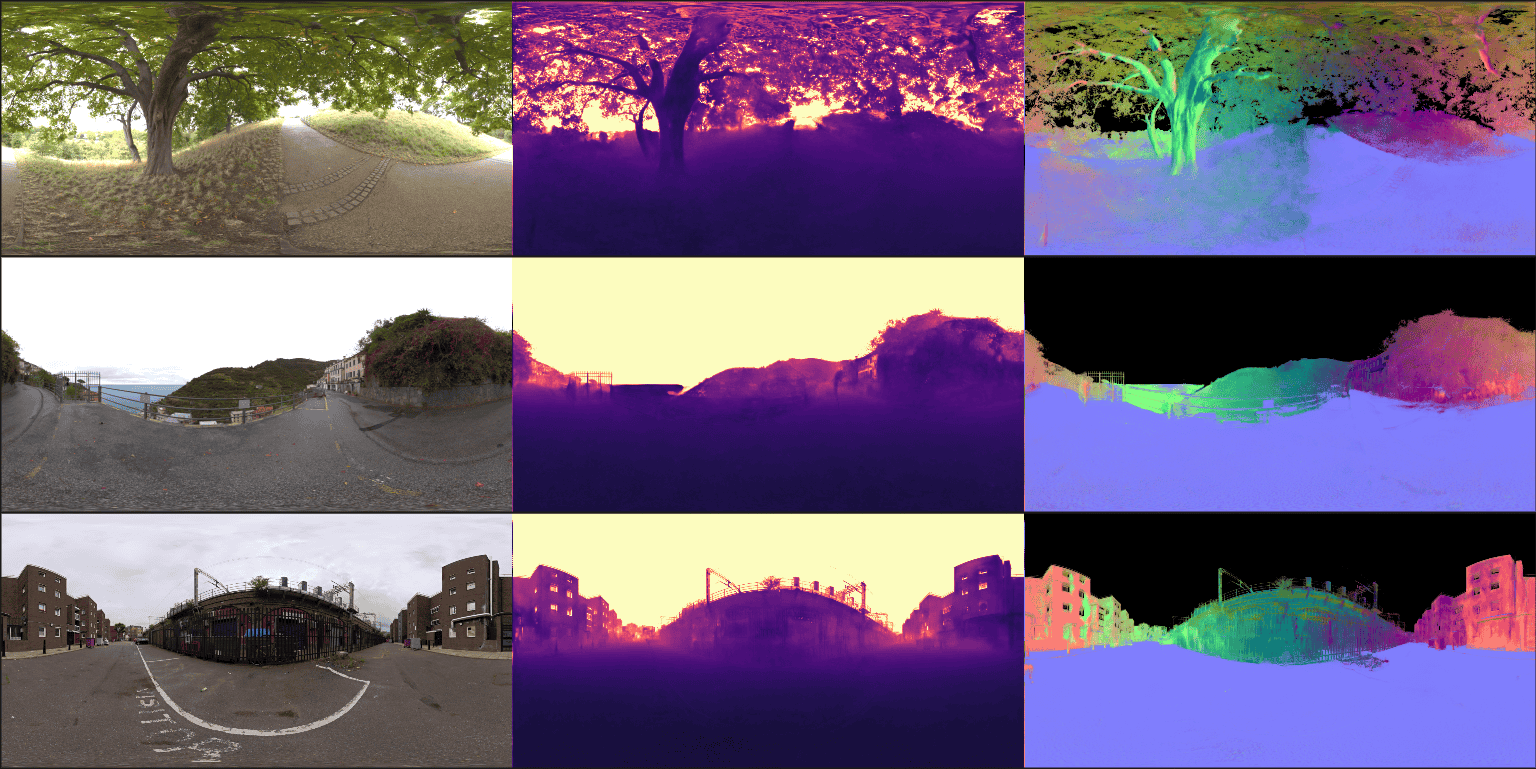

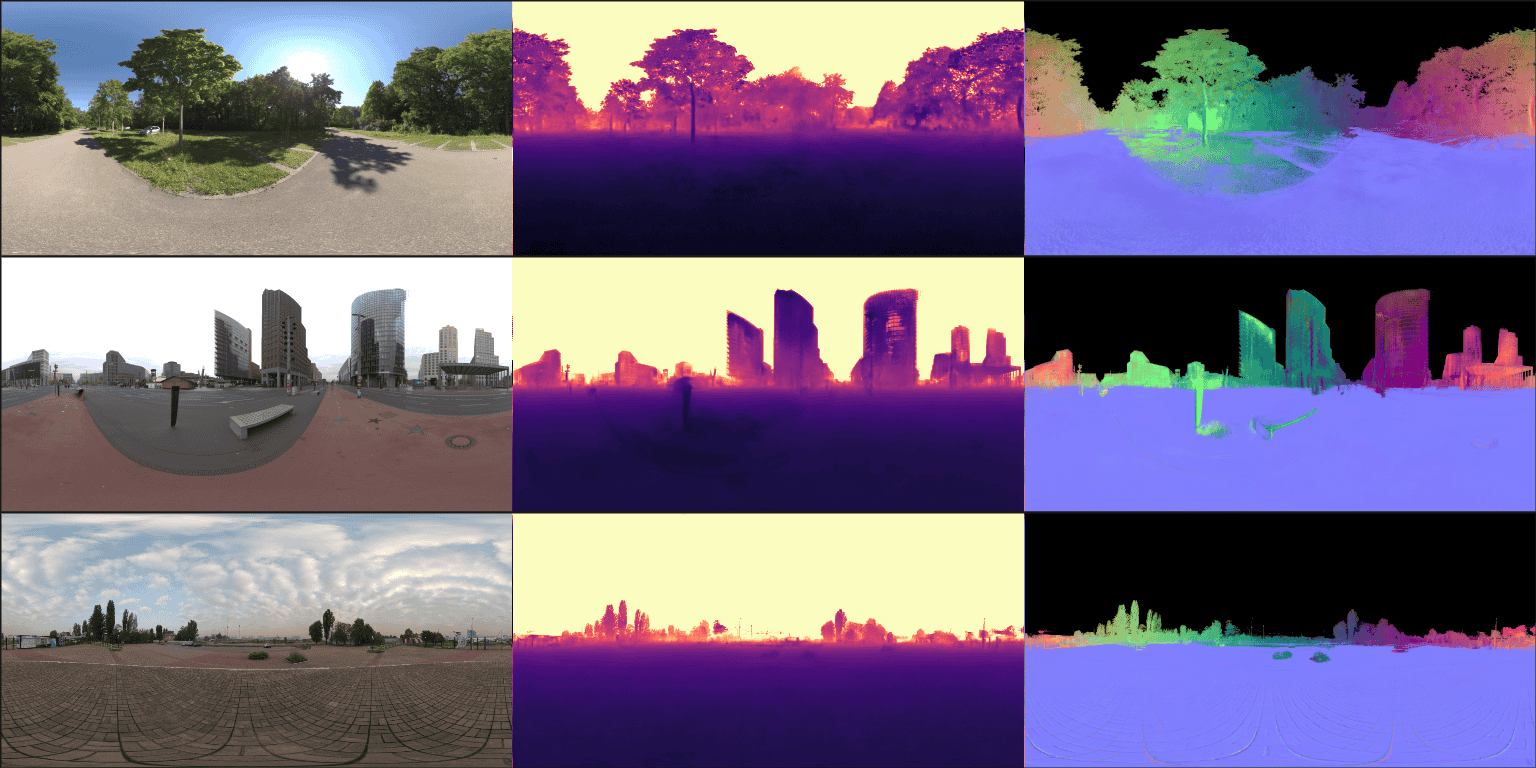

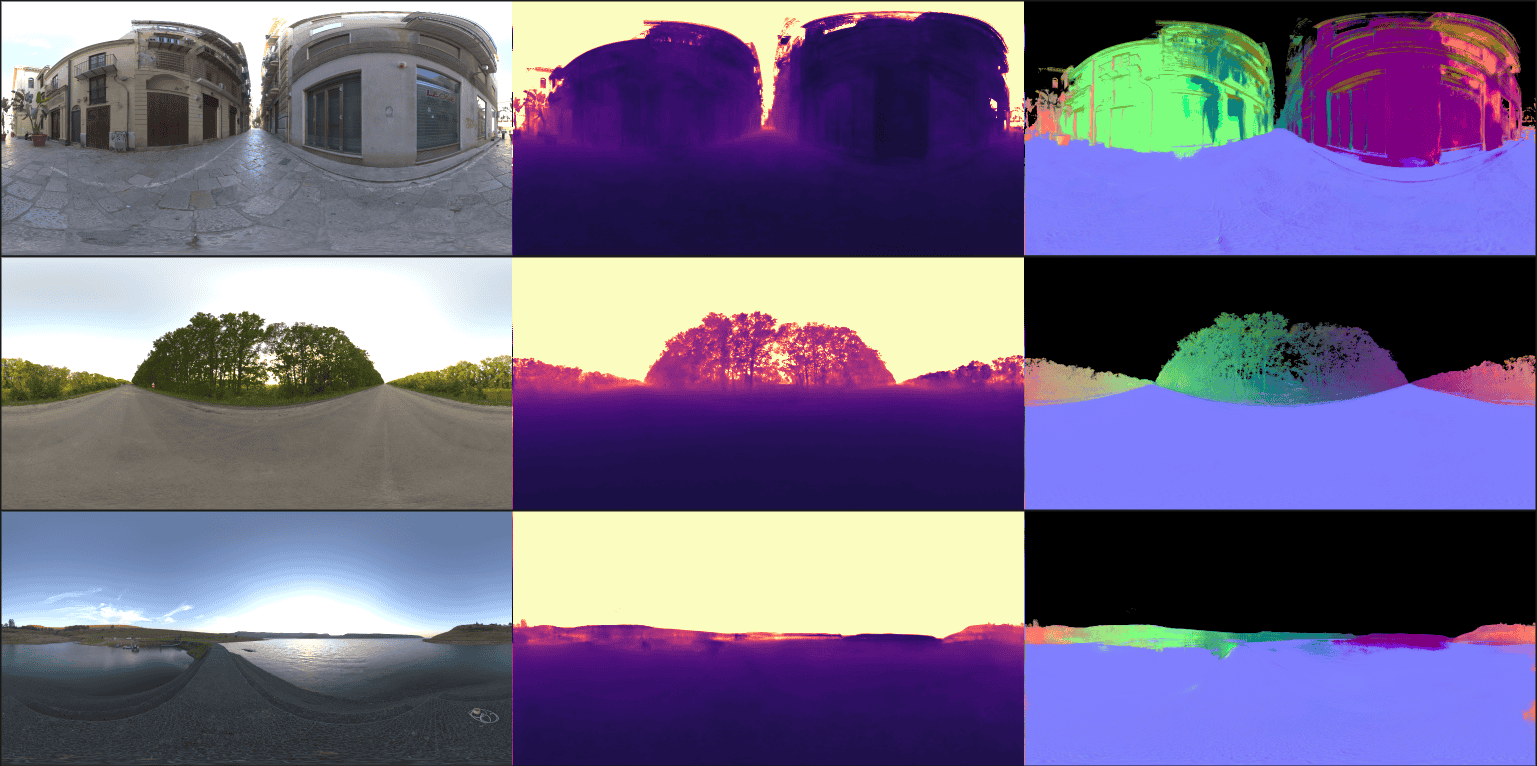

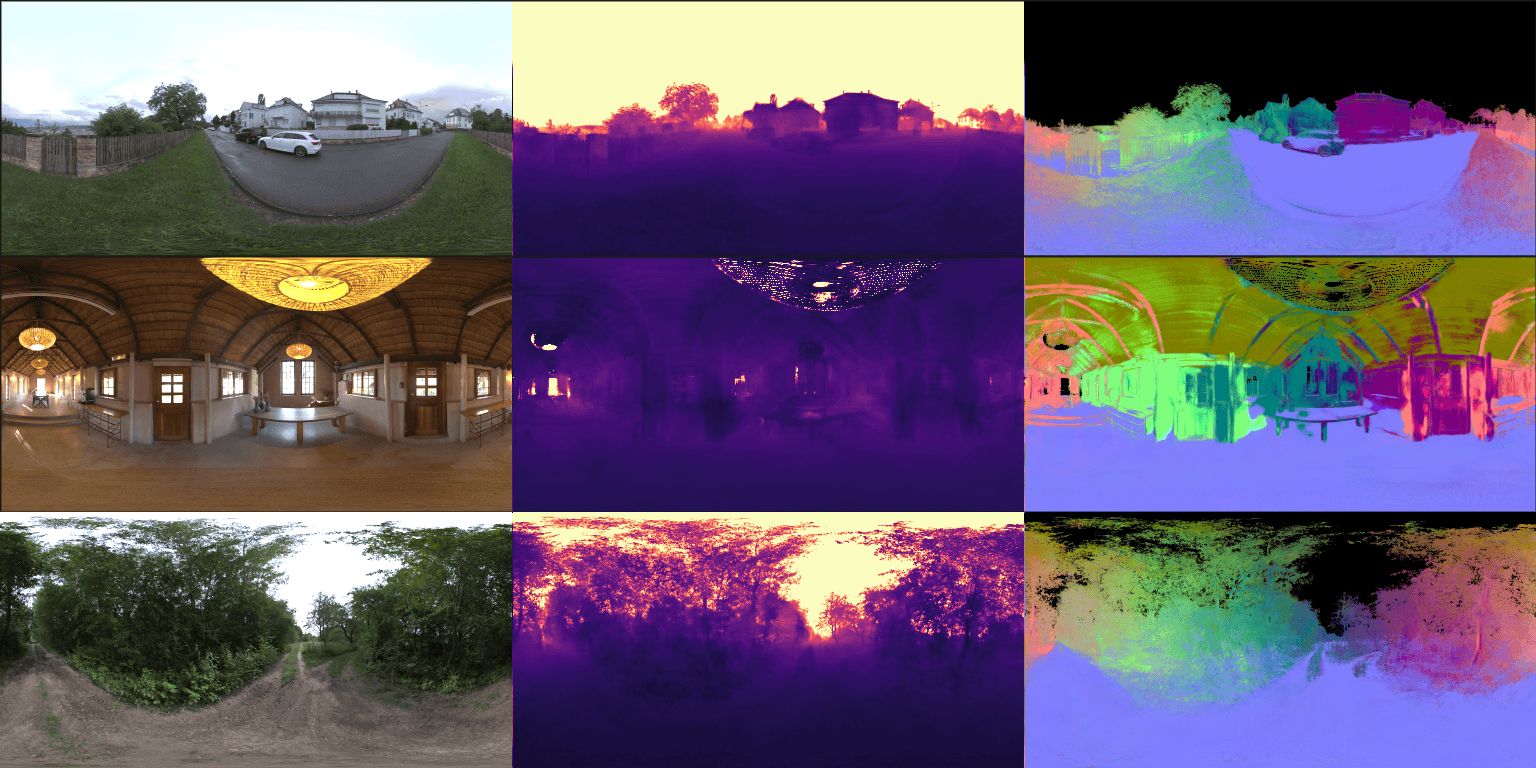

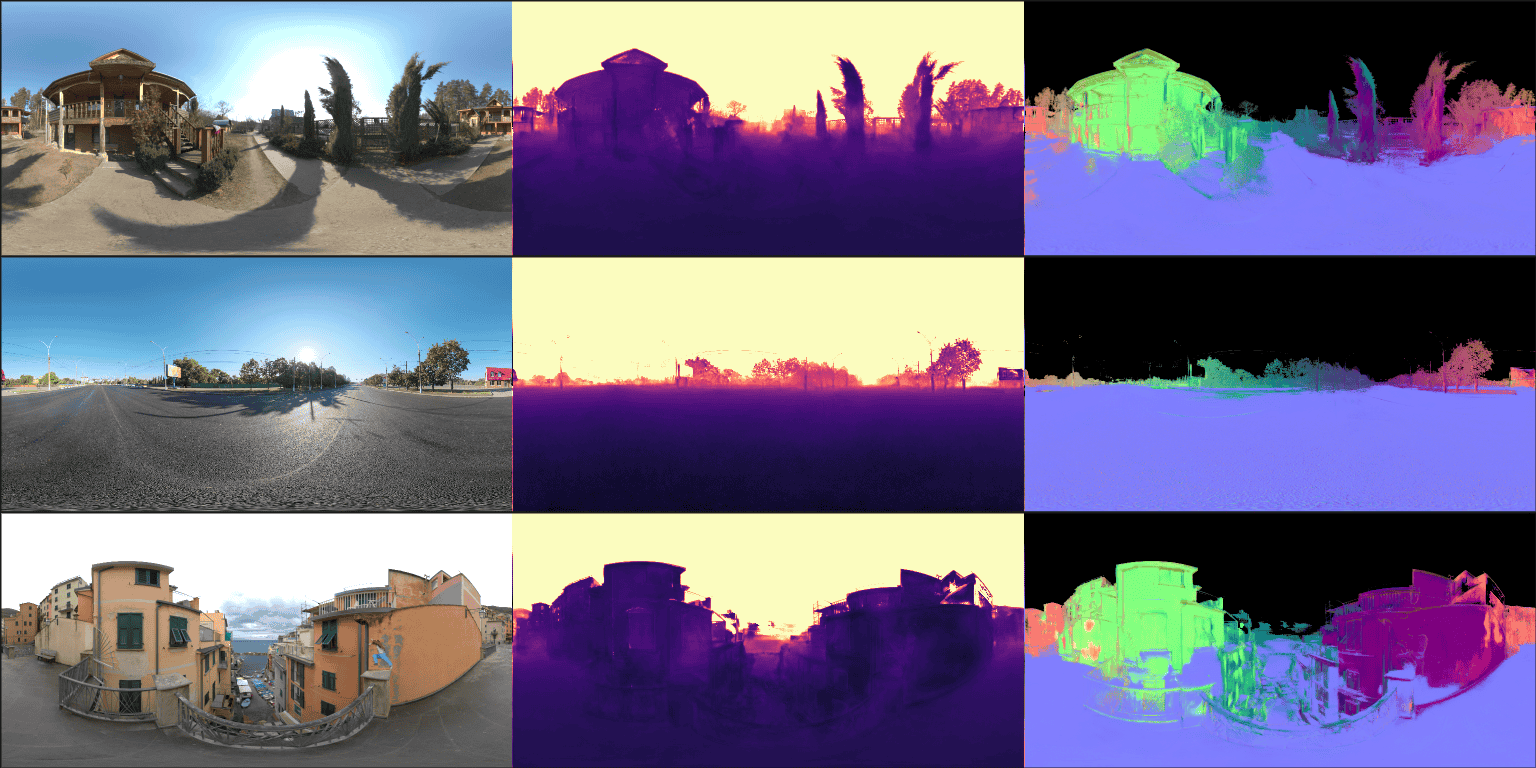

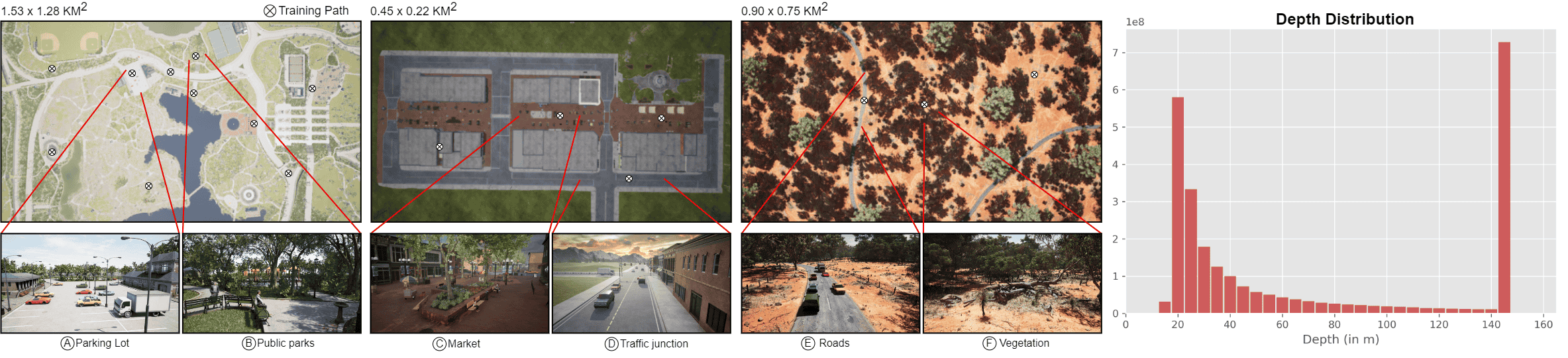

## Dataset

The OmniHorizon dataset was generated using Unreal Engine 4. It contains color images, scene depth, and world normals in a top-bottom (stereo) format, all rendered at 1024 x 512 resolution. The dataset provides 24,335 omnidirectional views of various outdoor scenes, such as parks, markets, traffic junctions, underpasses, and uneven terrain, populated with buildings, vehicles, and pedestrians.
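Because each sample stores its two views stacked vertically, a loader typically has to cut every frame into an upper and a lower view before use. The sketch below shows one way to do this. It assumes the frame is indexed row-first (for example, a NumPy array of shape `(H, W, C)` or a list of pixel rows), that the two views have equal height, and that the upper half belongs to the upper camera. The function name `split_top_bottom` is hypothetical and not part of any OmniHorizon tooling.

```python
from typing import Sequence, Tuple, TypeVar

T = TypeVar("T")


def split_top_bottom(image: Sequence[T]) -> Tuple[Sequence[T], Sequence[T]]:
    """Split a vertically stacked (top-bottom) frame into its two views.

    Hypothetical helper: `image` is indexed row-first, e.g. a NumPy array
    of shape (H, W, C) or a list of pixel rows. H must be even so that
    both views have the same height.
    """
    height = len(image)
    if height % 2 != 0:
        raise ValueError(f"expected an even number of rows, got {height}")
    half = height // 2
    top_view = image[:half]     # rows 0 .. half-1 (assumed: upper camera)
    bottom_view = image[half:]  # rows half .. H-1 (assumed: lower camera)
    return top_view, bottom_view
```

The same helper works unchanged for color, depth, and normal frames, because it only slices along the row axis. With NumPy arrays the slices are views, so no pixel data is copied.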